Hey Readers!

In the last post we saw the neuron getting activated. That is an important step in forward propagation. In this post we will see about the different error functions and cost functions that give the feedback for a network. In other words they are important in back-propagation (backprop).

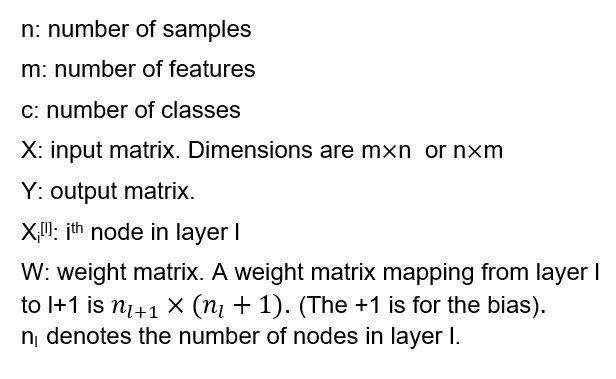

The Representation

One of the important basics of NN’s that we have to know is how the NN is represented on paper. This will help you understand the research papers or any other information that you read related to it. We will see the standard notations used for NN. (You might need to refresh your matrix basics. After all, most of DL is based on matrices)

So when we want to calculate a weighted sum, we multiply the weight matrix W with X. Let’s walk through a simple NN example to understand the matrix operations.

The Bias

The bias terms may or may not be explicitly mentioned. For a 1D vector they are added as an additional unit in the layer. Their default value is 1. The yellow colored units in the previous diagram show the bias unit.

For 2D matrices, they are added as a vector with the weighted sum. So a bias vector of size n l+1 × 1 is added. Thus the computation is WX+b (as seen in the previous post).

Why bias?

Bias helps us to improve the convergence of the neural network. In simple words, it helps learning. In almost every math problem, we love it when we have zeroes. But not in NN. So we have to change it to some non-zero value before we can proceed further. You can check this site for further reference.

Loss Functions

Loss Functions measure the difference between the predicted values and the actual values. This is termed as loss.

Mean Squared Error

This is an important loss function used for Regression problems (where the output is continuous. For example house rent prediction problem).

A simple variant is the Root Mean Squared Error. It’s value is the square root of MSE.

Binary Cross Entropy Loss

This is the most common loss function for binary classification problems. This is also a logarithmic loss.

y is the actual value and p is the predicted label. The following formula is just for one example.

Let’s try to understand the intuition behind this function.

We have to minimize L. We know that higher the magnitude of the negative number, lesser is it’s value. Thus we aim to maximize the RHS (the part inside the square brackets) in order decrease the loss.

If y=1, then T2 becomes zero. We have -log(p) on the RHS. Since our aim is to maximize the RHS we increase the value of p. Intuitively, we know that a higher value of p means that the example is true and this is achieved.

If y=0, then T1 becomes zero. We have -log(1-p) on the RHS. We need a lesser value of p. Only then 1-p will result in a higher value and this will maximize the RHS and thereby reduce the loss. Intuitively we know that a lower value of p means that the example is negative and this is achieved.

I hope I have made it clear. I know it’s a bit paradoxical. But just re-read it once with the formula and you are good to go.

Categorical Cross Entropy

This is the most popular loss function used for multi-class classification (MCC) problems. As we have already seen, the outputs for MCC are one-hot vectors.

Let assume that there are c classes in total. The following formula is just for one example.

The intuition behind this is really simple.

For any example, y i is 1 only for one class. Hence we have p i corresponding to that class. Same as before, we need to maximize the RHS in order to reduce the loss. Thus we increase the value of predicted value p for the correct class. Concretely we are trying to make the classifier predict a higher value for the correct class.

Voila! We have now learnt to quantify the performance of a NN.

The other loss functions are hinge loss, huber loss, Kullback Leibler Divergence Loss which you can learn based on your interests. But the 3 loss functions I have talked about here are enough to build great NN.

Cost Function

We talked about the performance of the model with respect to a single example. Now it’s time to quantify the performance of the model as a whole.

The cost function is actually used to update the weights in the network.

The cost function C is a function of the output y, weights w, input X and bias b. We sum over the losses computed by the loss function L and take their mean. We have to minimize the cost function in order to improve the performance of the neural network.

(The negative sign that we saw in the loss function is the negative sign here. It’s not an additional negative sign and so it does not cancel out the minus sign in L.)

So let’s go dive deeper into the concept of Cost Function.

We have understood that the cost function quantifies the performance of the NN and we need to reduce the cost. Thus we have reduced the problem of classification to a problem where we have to minimize the cost function. Hmm… Interesting..! We are simplifying the concept of NN to simpler math now.

You must be familiar with the concept of local minimum and local maximum in calculus. Our aim is to reach the global minimum of the cost function.

Image Credits: engmrk.com

We take the derivative of the cost function and “propagate” these derivatives across the network and update the weights and biases. We’ll see that in detail in the next post on backpropagation.

The cool thing about cost function is that you can develop your own cost function. But it must be differentiable. Only then the NN can learn using that cost function.

This brings us to the end of this post. In the next post we will see about backpropagation and completely understand how a model learns!

Thanks for reading! Subscribe to our mail letter to receive regular updates from the blog!